Collective dynamics in recurrent neural networks

Computation in the brain is performed by recurrent networks whose neurons exchange information in the form of brief pulses (spikes). Information processing is accompanied by collective phenomena such as synchronization, oscillations and spike avalanches. We are interested in understanding how such collective dynamics emerge from the interplay of single neurons and neuron populations, and how these dynamics support fundamental aspects of information processing such as feature integration, neural inference, and adaptive computation.

Examples from current research projects:

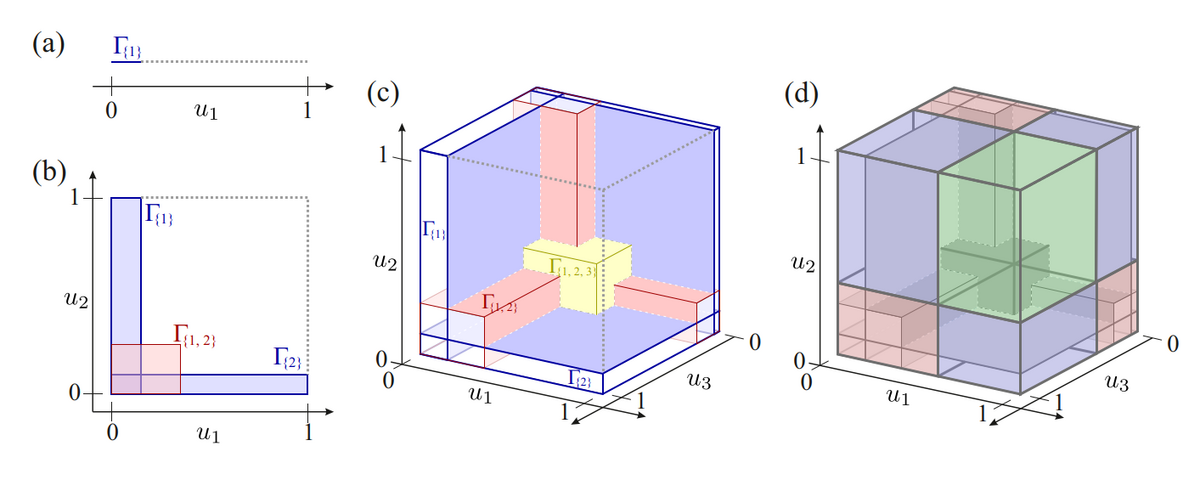

Theory of avalanche formation

Recurrent networks exhibit spontaneous synchronization in the form of spike avalanches. Depending on coupling strength, such networks undergo a transition from a disordered, asynchronous regime to an ordered, highly synchronous regime via a so-called critical state. One of our aims is to achieve a fundamental understanding of avalanche dynamics in recurrent systems, for which we recently developed a rigorous mathematical framework. This understanding allows us to pinpoint the benefit of spontaneous synchronization for selective information integration, and to shed light on the role of criticality in neural information processing.
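The dependence of avalanche size on coupling strength can be illustrated with a toy branching process. This is a minimal sketch for intuition only and is not the mathematical framework mentioned above. The function names and the parameters `sigma`, `n_targets`, `max_size` and `n_trials` are assumptions made for this example; `sigma` (the mean number of units activated by one active unit) serves as a stand-in for coupling strength.

```python
import random


def avalanche_size(sigma, rng, n_targets=10, max_size=1000):
    """Return the size of one avalanche in a toy branching process.

    Each active unit tries to activate n_targets other units, each with
    probability sigma / n_targets, so sigma is the mean number of units
    triggered per active unit (hypothetical stand-in for coupling strength).
    Avalanches are cut off at max_size to keep the simulation finite.
    """
    p = sigma / n_targets
    active, size = 1, 1  # the avalanche starts with a single active unit
    while active > 0 and size < max_size:
        # Count how many downstream units fire in the next time step.
        triggered = sum(
            1 for _ in range(active * n_targets) if rng.random() < p
        )
        size += triggered
        active = triggered
    return min(size, max_size)


def mean_avalanche_size(sigma, n_trials=500, seed=0):
    """Average avalanche size over n_trials independent avalanches."""
    rng = random.Random(seed)
    total = sum(avalanche_size(sigma, rng) for _ in range(n_trials))
    return total / n_trials


if __name__ == "__main__":
    for sigma in (0.5, 1.0, 1.5):
        print(sigma, mean_avalanche_size(sigma))
```

In this toy model, avalanches die out quickly for `sigma` below 1, a large fraction of them grow until they hit the `max_size` cutoff for `sigma` above 1, and avalanche sizes are broadly distributed near `sigma = 1`, which plays the role of the critical state.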

Feature integration in convolutional neural nets

Information integration in the visual system is a highly complex process which involves many different brain areas. However, most computational aspects of this process are not yet understood. Although deep convolutional networks reach or surpass human performance, e.g. in object recognition, it is unclear what insights AI approaches offer for brain research. Our lab combines its long-standing experience in investigating feature integration in the visual system with machine learning techniques to advance our understanding of visual computation. For example, in a recent study we trained a shallow convolutional network subject to anatomical and physiological constraints on a contour integration task. The study showed that surprisingly little neural ‘hardware’ is required to solve a complex task, and it yielded new, experimentally testable ideas about neural mechanisms that allow the efficient integration of information in vision.