Flexible information processing and integration in modular networks

In contrast to machines, networks in the brain have a limited amount of neural resources, and their information processing is constrained by the biophysics of synapses and neurons. Faced with the many challenges of complex environments, mammalian brains have therefore evolved strategies to flexibly re-use existing networks and structures so that they can adapt rapidly to changing computational needs.

We want to understand how flexibility is achieved, and how networks have to be shaped to support this ability.

Making perceptrons flexible

For example, we are studying how to train a novel class of artificial neural networks (‘FlexiTrons’) to switch instantly between computing different Boolean functions. These networks evolve connection structures that are optimized for both flexibility and function. By introducing biophysical constraints, we discovered a tradeoff between flexibility and robustness. This tradeoff can be improved by stacking multiple FlexiTron modules hierarchically, as in the visual cortex.
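To make the idea of switching between Boolean functions concrete, here is a minimal sketch in Python. It is not the FlexiTron architecture or its training procedure, neither of which is specified here. It only shows how a single threshold unit can compute AND or OR depending on an extra context input. The names `flexible_unit` and `truth_table`, and all weights and the bias, are hand-picked assumptions for illustration.

```python
from itertools import product


def step(x):
    """Heaviside threshold: 1 if the summed input is positive, else 0."""
    return 1 if x > 0 else 0


def flexible_unit(x1, x2, context):
    """Single threshold unit whose Boolean function is selected by `context`.

    Weights and bias are hand-picked for illustration (assumed, not learned):
    context = 0 gives AND, context = 1 gives OR.
    """
    w1, w2, w_context, bias = 1.0, 1.0, 1.0, -1.5
    return step(w1 * x1 + w2 * x2 + w_context * context + bias)


def truth_table(context):
    """Outputs for the inputs (0,0), (0,1), (1,0), (1,1) under one context."""
    return [flexible_unit(x1, x2, context) for x1, x2 in product((0, 1), repeat=2)]


if __name__ == "__main__":
    print("context 0 (AND):", truth_table(0))
    print("context 1 (OR): ", truth_table(1))
```

In this toy unit the context input simply shifts the effective threshold, so the same two input weights serve both functions. A single unit of this kind cannot switch to functions that are not linearly separable, such as XOR.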

Routing by avalanches

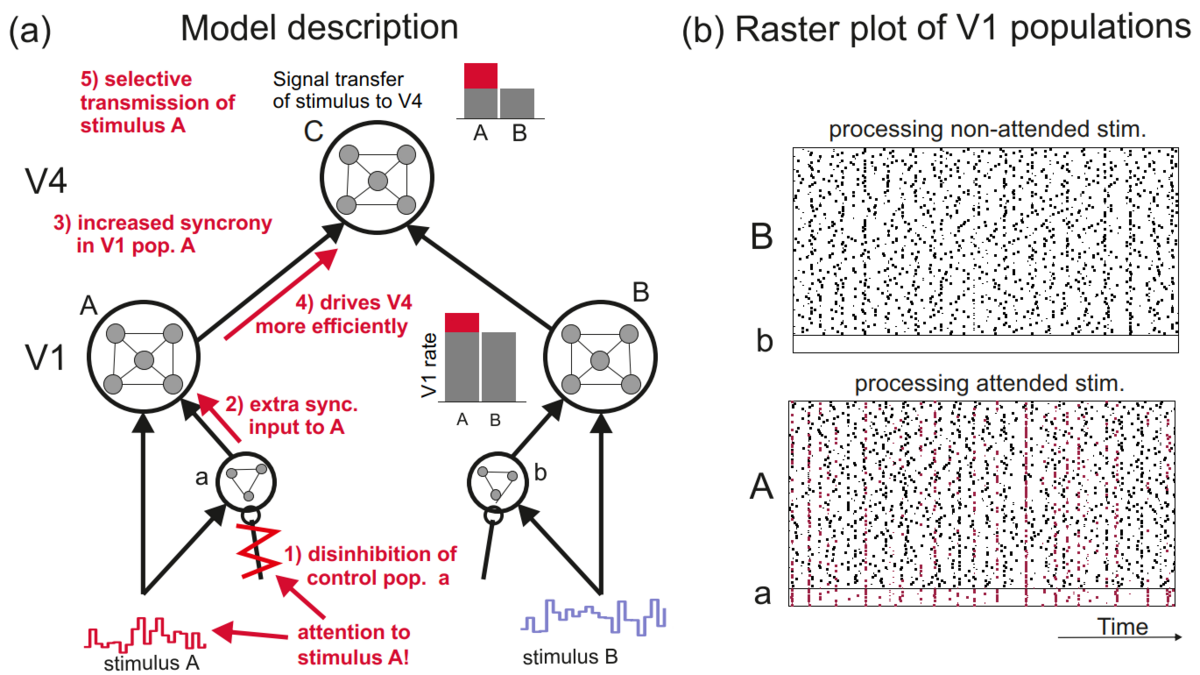

In parallel, we used our theory of spike avalanches in recurrent networks to propose a new scheme for selectively routing visual information. Increasing synchrony in the neural representation of an attended stimulus boosts the information delivered to other visual areas by a factor several times larger than the associated modulation in neural firing rates, which offers an elegant explanation for physiological data.
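The intuition that synchrony can matter more than firing rate can be illustrated with a deliberately simplified Python sketch. It is not the avalanche-based model described above. It assumes a downstream unit that acts as a coincidence detector over discrete time bins, and the names `make_population` and `downstream_spikes`, as well as all parameter values, are hypothetical. Both input populations fire at exactly the same rate and differ only in their relative timing.

```python
def make_population(n_neurons, n_bins, period, synchronous):
    """Build binary spike trains in which each neuron fires once per `period` bins.

    Firing rates are identical in both modes. In the synchronous case all
    neurons share one phase; otherwise the phases are staggered.
    """
    trains = []
    for i in range(n_neurons):
        phase = 0 if synchronous else i % period
        trains.append([1 if t % period == phase else 0 for t in range(n_bins)])
    return trains


def downstream_spikes(spike_trains, threshold):
    """Count time bins in which the summed input reaches the threshold."""
    n_bins = len(spike_trains[0])
    summed = [sum(train[t] for train in spike_trains) for t in range(n_bins)]
    return sum(1 for s in summed if s >= threshold)


if __name__ == "__main__":
    sync = make_population(n_neurons=4, n_bins=20, period=4, synchronous=True)
    spread = make_population(n_neurons=4, n_bins=20, period=4, synchronous=False)
    print("input spikes (sync, spread):", sum(map(sum, sync)), sum(map(sum, spread)))
    print("downstream spikes, synchronous input:", downstream_spikes(sync, threshold=3))
    print("downstream spikes, staggered input:  ", downstream_spikes(spread, threshold=3))
```

With these assumed parameters the synchronous population drives the downstream unit in every period, while the staggered population never reaches threshold, although both deliver the same number of input spikes.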