MAPS

MAPS – Myoelectric Array-based Processing of Speech

Speech is the most natural and convenient form of communication between humans, and mobile phones and speech-controlled electronic systems have made spoken communication even more important. However, speech recognition systems that rely on the acoustic speech signal have several drawbacks: recognition accuracy and communication quality deteriorate in the presence of ambient noise, audible speech disturbs bystanders, and it offers no privacy. In addition, speech-disabled persons are excluded from this form of communication.

These problems are addressed by Silent Speech Interfaces, which the Cognitive Systems Lab has been researching for several years. Our speech recognizer based on surface electromyography (EMG) is the world's most powerful system of its type.

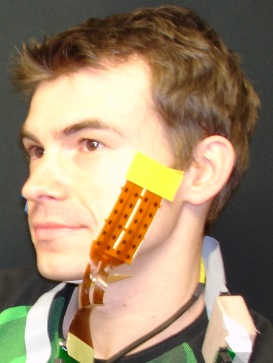

The MAPS project (Myoelectric Array-based Processing of Speech) started on January 1, 2013. In this project, we will extend the capabilities of the existing system by integrating a new technology: multi-channel electrode arrays, which enable us to record a large number of EMG channels simultaneously. This yields several advantages, including increased spatial resolution, robustness to shifts in electrode position, increased usability, and improved means of separating muscle signals from artifacts.

MAPS aims to systematically investigate the possibilities that arise from the use of multi-channel EMG arrays. This includes improved usability, innovative signal processing, and the collection of a large data corpus, which will in time be made publicly available.

The MAPS project is funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) from 2013 to 2015.